Amazon dominated the first cloud era. The AI boom has kicked off Cloud 2.0, and the company doesn't have a head start this time.

The Cloud 1.0 era was dominated by Amazon Web Services.

The generative AI boom has ushered in the Cloud 2.0 era.

Amazon doesn't have a head start this time, and needs help to catch Nvidia.

When you're building a house, you can do it in various ways.

You can build all the tools you need and then hike into a forest to chop down wood and turn that into your final structure.

Or, you can go to Home Depot and buy wood that's already been chopped down, along with all the nails, hammers and other equipment you need.

Or, you can pay a contractor to do most of the work.

Over the past 15 years, Amazon Web Services became the go-to contractor for anyone wanting to build something online. A website, an app, a business software service. Nowadays, very few developers venture into the digital forest and chop down their own semiconductors and build their own servers and data centers. AWS does it all for you, and provides most of the tools you need.

This is what turned AWS from a fringe idea in 2006 into an $80 billion business with juicy profit margins and a huge, sticky customer base. This is the essence of a technology platform.

The rise of generative AI has created what some technologists are calling Cloud 2.0. There's a new stack of hardware, software, tools, and services that will power AI applications for years to come. Which company will be the go-to contractor in this new world? When you're building your AI house, who will you turn to for all that digital wood and the right tools that just work well together to get the job done?

At the moment, AWS doesn't look like the leader here, while Nvidia has a massive head start.

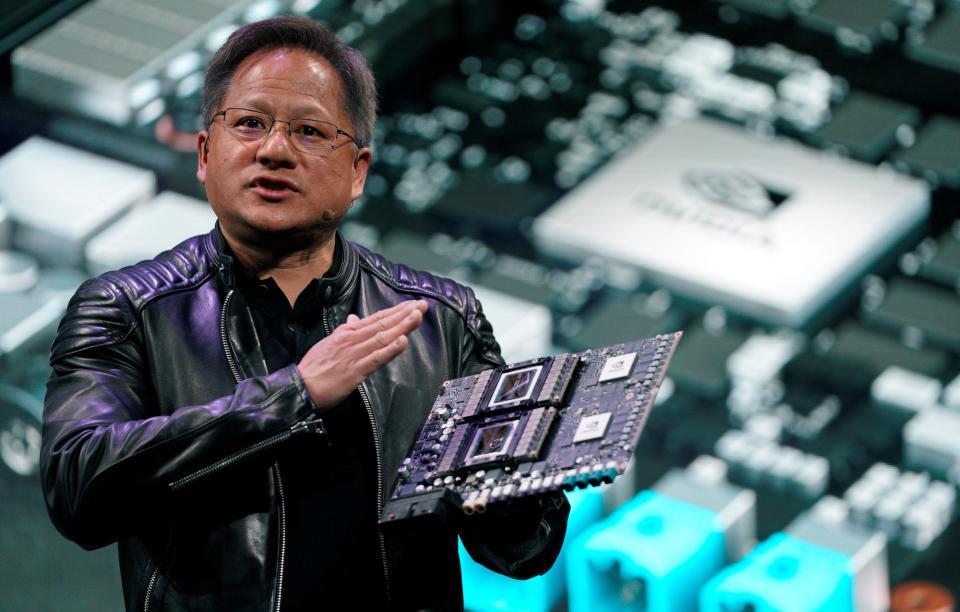

Nvidia designs and sells GPUs that are the go-to chips for almost everyone who is creating large language models and other big AI models. These components were being used by AI researchers more than a decade ago when Andrew Ng and others discovered GPUs could handle many computations in parallel. In contrast, AWS launched its Trainium chip for AI model training in December 2020, almost a decade later.

During that decade, Nvidia also built a platform around its GPUs that helped AI developers do their jobs. The company created compilers, drivers, an operating system, tools, code libraries, training notes and videos, and more. The core of this platform is a parallel programming model called CUDA.

There are now more than 4 million registered Nvidia developers who use CUDA and all its related tools. That's up from 2 million in August 2020, and 1 million two years before that. The closest thing AWS has right now is called Neuron. This was released in 2019, according to its GitHub page. CUDA came out in 2007.

Libraries, models, and frameworks

Michael Douglas, a former Nvidia executive, spoke to Bernstein analysts recently about all this, explaining that the chipmaker's software and tools are far ahead of the competition:

There are 250 software libraries available to developers that sit on top of CUDA. A library is pre-built code that performs a function like handling video or audio or doing something in graphics.

There are also 400 pre-built models that Nvidia offers developers for AI tasks such as image recognition and voice synthesis.

These libraries and models are woven together with popular software frameworks and platforms such as PyTorch, TensorFlow, Windows and VMware.

This all sits in stacks on top of 65 platforms. A platform provides most of the other software developers need to operationalize something in an environment in a data center, or at the edge, with AI.

"What that means is that, it's like if you want to build a house, the difference between going into the forest and having to cut down your own trees and make your own tools or going into Home Depot, where you buy the lumber and the tools and get everything to work together," Douglas said.

Cloud 2.0

Another key point here: Most AI developers already know how to use CUDA and Nvidia GPUs. All the hacks, tricks, and other shortcuts are embedded in the community.

Arguably, Nvidia has already created an AI cloud platform – as AWS once did for the Cloud 1.0 era. As we move into Cloud 2.0, this will be hard for AWS, or anyone else, to break.

"Nvidia has been betting on AI for a long time. They have a level of software maturity that no other provider can match," said Luis Ceze, CEO and cofounder of AI startup OctoML. "With custom silicon for AI, there's a strong need for a mature and easy-to-use system software stack."

The Amazon-Anthropic feedback loop

Don't count AWS out yet, though. It's early days, as Andy Jassy and Adam Selipsky keep saying. James Hamilton is an AWS cloud infrastructure genius who can take on Nvidia, even if the chipmaker has a major head start.

"Nvidia has a lock on the market due to advantages in software," said Oren Etzioni, a technical director at the AI2 Incubator and a partner at Madrona Venture Group. "There's an argument that this window is starting to close."

The Cloud 2.0 race really kicked off in late September when Amazon announced an investment of up to $4 billion in an AI startup called Anthropic. This relatively small San Francisco-based company is packed with researchers who helped create the core GPT technology at the heart of today's generative AI boom. Anthropic has one of the most capable large AI models out there, called Claude.

Anthropic will now use AWS's in-house Trainium and Inferentia chips to build, train, and deploy its future AI models, and the two companies will collaborate on the development of future Trainium and Inferentia technology.

A key part here is the feedback loop between Anthropic and AWS. Those AI pioneers at Anthropic are now committed to using AWS's chips, along with all the related software and tooling. If AWS is lacking here, Anthropic can help identify the biggest holes and suggest ways of filling them.

To catch Nvidia, AWS really needed a marquee developer like this. All it took was $1 billion to $4 billion.

"Amazon needs a major AI player using its own silicon," said Ceze. "Amazon being able to show Anthropic running their workloads on Amazon chips is a win. They want other folks building AI models to feel confident that the silicon is good and the software is mature."

Avoiding the Nvidia tax

Making your own AI chips is a really important advantage for winning the Cloud 2.0 race. If AWS has to keep buying Nvidia GPUs, it will have to pay a margin to Nvidia. (Some AI experts call this the Nvidia tax.) That will make AWS's AI cloud services more expensive to run.

If, instead, AWS can make its own AI chips, then it gets to keep that Nvidia profit margin for itself. That means it can offer AI training and inference cloud services that are more cost effective.
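The arithmetic behind the "Nvidia tax" can be sketched in a few lines of Python. This is a rough illustration only: every number below is made up, the function name `hourly_price` is hypothetical, and the model ignores the research and design costs a cloud provider takes on when it builds its own chips.

```python
def hourly_price(chip_cost, supplier_margin, lifetime_hours, overhead_per_hour):
    """Break-even hourly rental price for one AI accelerator.

    chip_cost         -- cost to manufacture the chip (hypothetical)
    supplier_margin   -- share of the purchase price the supplier keeps
                         as gross margin (0.0 means the chip is built in-house)
    lifetime_hours    -- hours the chip is rented out before retirement
    overhead_per_hour -- power, cooling, and data center cost per hour
    """
    # The buyer pays manufacturing cost plus the supplier's margin.
    purchase_price = chip_cost / (1.0 - supplier_margin)
    # Spread the purchase price over the chip's working life.
    return purchase_price / lifetime_hours + overhead_per_hour


# All figures are illustrative assumptions, not real prices.
bought = hourly_price(10_000, 0.60, 30_000, 0.50)    # chip bought from a supplier
in_house = hourly_price(10_000, 0.00, 30_000, 0.50)  # chip made in-house

print(f"bought from supplier: ${bought:.2f}/hour")
print(f"made in-house:        ${in_house:.2f}/hour")
```

Under these assumed numbers, the only difference between the two cases is the supplier's margin, which is the gap a cloud provider could either keep as profit or pass on as lower prices.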

At a recent AWS event in Seattle, Selipsky, AWS's CEO, hammered this message home: AWS's AI cloud services will be competitive based on "price performance." This means it should be more efficient to build AI models on AWS, according to the CEO.

In the Cloud 1.0 era, AWS pulled this off partly through the acquisition of Annapurna Labs in 2015. This startup helped Amazon design its own Graviton CPUs for its cloud data centers. Suddenly, AWS no longer needed to buy as many expensive Xeon chips from Intel. This was an important element that made AWS such a competitive and successful cloud provider.

How easy is it to switch from Nvidia?

Here's the problem though: You can have great AI chips and great prices, but will anyone switch from using Nvidia GPUs and the CUDA platform? If they don't, AWS might not win Cloud 2.0 in the way it did with Cloud 1.0.

This brings us back to the house-building analogy. At the moment, Nvidia probably offers the best combination of wood and tools to get the job done. If you're already using this, can you switch easily?

I asked Sharon Zhou, cofounder of Lamini AI. Her startup spent months building a data center from scratch to help customers train AI models. When it was unveiled in September, the surprise was that Lamini used AMD GPUs rather than Nvidia chips.

How was the switch? Zhou said Lamini didn't even try because it would have been so hard.

"We never switched. We started the company on AMD. That's why it works," she said. "Switching would be more difficult than starting from scratch. If you want to switch, you'd have to apply a major refactor to your entire codebase."

She also went back to one of the same points that Douglas, the former Nvidia exec, made: The AI libraries and other frameworks and tooling around CUDA are just more developed.

"Libraries are heavily integrated with Nvidia today," Zhou said. "You need to reimplement fundamental abilities to work with large language models. Outside of the fundamentals, you need to do so for other common libraries, too."

Nvidia's lock-ins

Etzioni said Nvidia still has two main lock-ins with AI developers.

The first is that PyTorch, a popular AI framework, is optimized really well for CUDA and therefore Nvidia GPUs. OctoML, the startup Ceze runs, is working on a bunch of gnarly technical fixes that it hopes will make it easier for AI developers to use any GPU with different frameworks and platforms in the future, including AWS's offerings.

Second, once you can efficiently connect to another AI software stack, that alternative platform has to be at least as good as CUDA. "And the alternatives are just not there yet," Etzioni said.

AMD may be the closest to Nvidia at the moment, according to Etzioni. That chipmaker has ROCm, a software platform that gives AI developers access to popular languages, libraries and tools.

He didn't mention AWS or Trainium, though.

Read the original article on Business Insider