The Myth of the Innovator Hero

Every successful modern e-gadget is a combination of components made by many makers. The story of how the transistor became the building block of modern machines explains why.

We like to think that invention comes as a flash of insight, the equivalent of that sudden Archimedean displacement of bath water that occasioned one of the most famous Greek interjections, εὕρηκα. Then the inventor rapidly translates a stunning discovery into a new product. Its mass appeal soon transforms the world, proving once again the power of a single, simple idea.

But this story is a myth. The popular heroic narrative has almost nothing to do with the way modern invention (conceptual creation of a new product or process, sometimes accompanied by a prototypical design) and innovation (large-scale diffusion of commercially viable inventions) work. A closer examination reveals that many award-winning inventions are re-inventions.

Most scientific or engineering discoveries would never become successful products without contributions from other scientists or engineers. Every major invention is the child of far-flung parents who may never meet. These contributions may be just as important as the original insight, but they will not attract public adulation. They will not be celebrated by the media, and they will not be rewarded with Nobel prizes. We insist on celebrating lone, heroic pathfinders, but even the most admired and most successful inventors are part of a remarkable supply chain of innovators who are largely ignored in favor of the simpler mythology of one man or one eureka moment.

THE BIRTH OF MODERN ELECTRONICS

Where great ideas really come from. A special report

Perhaps nothing explodes the myth of the Lonely Innovator Hero like the story of modern electronics. To oversimplify a bit, electronics works through the switching of electronic signals and the amplification of their power and voltage. In the early years of the 20th century, switching and amplification were done (poorly) with vacuum tubes. In the middle of the 20th century, they were done more efficiently by transistors. Today, most of this work is done on microchips (large numbers of transistors on a silicon wafer), which became the basic building block of modern electronics, essential not only for computers and cellphones but also for products ranging from cars to jetliners. All of these machines are now operated and controlled by, simply stated, the switching and amplification of electronic signals.

The dazzling and oversimplified story about electronics goes like this: The transistor was discovered by scientists at Bell Labs in 1947, leading directly to integrated circuits, which in turn led straight to microprocessors whose development brought us microcomputers and ubiquitous cellphones.

The real story is more complicated, but it explains how invention really happens: through a messy process of copy, paste, and edit. Julius Lilienfeld filed a patent for the first transistor in 1925, more than two decades before the Bell Labs work. In 1947, Walter Brattain and John Bardeen amplified power and voltage using a germanium crystal, but their device, the point-contact transistor, did not become the workhorse of modern electronics. That role went to the junction transistor, which William Shockley (seen in the next photo) conceived in 1948 and patented in 1951. Today, even the Bell System Memorial site concedes that "it's perfectly clear that Bell Labs didn't invent the transistor, they re-invented it."

Moreover, germanium, the material used in the epochal 1947 transistor, did not become the foundation of modern electronics. That role went to silicon, in part because the element is roughly 150,000 times more common in the Earth's crust than germanium.

HOW SILICON CHANGED EVERYTHING

This is where another essential invention comes into the story. Semiconductor-grade silicon must be ultrapure before it is doped, that is, before tiny amounts of impurities are added to change its conductivity.

To lower the production cost of silicon wafers, the crystals from which they are sliced must be relatively large. These requirements led to new methods of silicon purification (purities of 99.9999999 percent are common) and to ingenious methods of growing large crystals, both enormous technical accomplishments in their own right. The story of crystal-making began in 1918, when Jan Czochralski, a Polish metallurgist, discovered how to convert extremely pure polycrystalline material into a single crystal; procedures for growing larger crystals were introduced in the early 1950s by Gordon Teal and Ernest Buehler at Bell Labs. Soon afterwards, Teal became the chief of R&D at Texas Instruments, where a team led by Willis Adcock developed the first commercial silicon transistor in 1954.

In May 1954, at an Institute of Radio Engineers conference in Dayton, Teal demonstrated another advantage of silicon. After announcing that his company was producing silicon transistors, Teal turned and switched on an RCA 45-rpm record player, which played the swinging sounds of Artie Shaw's "Summit Ridge Drive." The germanium transistors in the record player's amplifier were dunked in a beaker of hot oil, and the sound died away as the devices failed from the high temperature. Then Teal switched over to an identical amplifier built with silicon transistors, placed them in the hot oil, and the music played on.

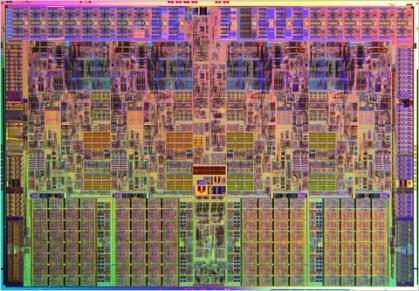

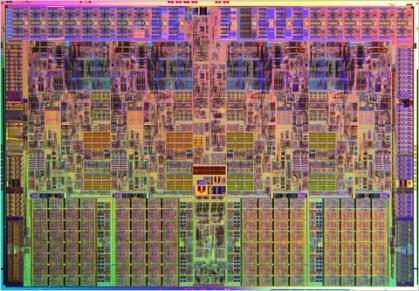

The relentless quest for larger crystals was on, as generations of researchers pushed the diameter from the original half inch to 12 inches and lowered unit production costs. The availability of ultrapure silicon opened the way to crowding tiny transistors onto silicon wafers to make the first integrated circuits (Jack Kilby in 1958 and Robert Noyce in 1959). Then a way to grow crystals one atomic layer at a time had to be found (a Bell Labs group led by Alfred Cho did that in 1968) before a group at Intel (with Marcian Hoff, Stanley Mazor and Federico Faggin as the main protagonists) could make its first microprocessor (essentially a computer on a chip, seen in the photo) in 1971, for a Japanese programmable calculator.

The small armies of scientists and engineers employed by Intel and other chipmakers had to come up with new ways to place ever larger numbers of transistors on a chip. Their total increased from just 2,300 transistors in 1971 to more than one million by 1990 and to more than two billion by 2010. And, obviously, crowding all of these transistors together to make computers on a chip would be useless unless somebody created the programming languages and operating systems needed to give them processing instructions. One of the people who made the most fundamental contributions in that regard was Dennis Ritchie, the creator of the C programming language and, with Ken Thompson, of the UNIX operating system; C later shaped languages such as C++ and Java.

THE HERO MYTH

Ritchie died a few days after Steve Jobs. There were no TV specials, no genius epithets on cable news. Ritchie, much like Czochralski, Lilienfeld, Teal, Frosch, Noyce, Cho, Hoff or Faggin, was "just" one of hundreds of innovators whose ideas, procedures and designs, aided and advanced by the cooperative efforts of thousands of collaborators and decades of investment totaling hundreds of billions of dollars, had to be combined to create an entirely new e-world.

These aren't the exceptions. Collaboration and augmentation are the foundational principles of innovation. Modern path-breaking invention/innovation is always a process, always an agglomerative, incremental and cooperative enterprise, based on precedents and brought to commercial reality by indispensable contributions of other inventors, innovators and investors. Ascribing the credit for these advances to an individual or to a handful of innovators is a caricature of reality propagated by a search for a hero. The era of heroic inventions has been over for generations. A brilliant mind having a eureka moment could not create an Intel microprocessor containing a billion transistors any more than one person could dream up a Boeing 787 from scratch.

Eureka moments do exist, and some inventors have made truly spectacular individual contributions. But we pay too much attention to a few individuals and too little attention to the many moments of meaningful innovation that come next.
